Of the 31 applications received, many of them excellent, the Jury of the Stefano Rodotà Award 2023, composed of the members of the Bureau of the Committee of Convention 108, decided to grant the award:

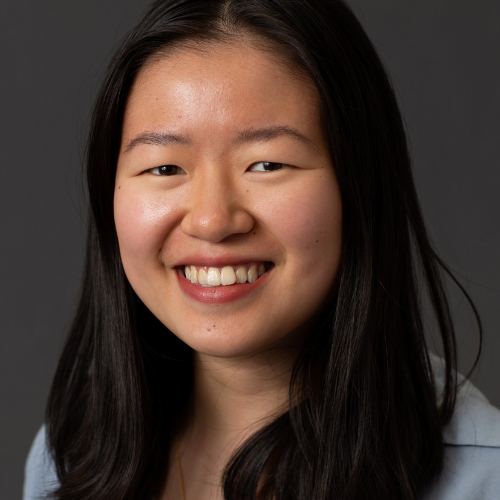

- in the “thesis works” category, Janis Wong for her thesis titled “Co-creating data protection solutions through a Commons”

- in the “articles” category, Sebastiao Bernardo Bruco Geraldes de Barros Vale, Katerina Demetzou and Gabriela Zanfir-Fortuna, co-authors of a piece of work titled “The Thin Red Line: Refocusing Data Protection Law on ADM, A Global Perspective with Lessons from Case-Law”.

- Furthermore, as the award rules allow, the jury has decided to give a special mention to Francesca Musiani and Ksenia Ermoshina for their work “Concealing for Freedom: The Making of Encryption, Secure Messaging and Digital Liberties”.

- Janis Wong's thesis aims to create a socio-technical data commons framework that helps data subjects protect their personal data. Data protection laws and technologies limit the vast collection, processing, and sharing of personal data in our data-driven society. However, these tools may lack support for protecting individual autonomy over personal data through collaboration and co-creation. To address this, her research explores the creation of a data protection-focused data commons to encourage the co-creation of data protection solutions and rebalance power between data subjects and data controllers. Using research methods from Computer Science, Management, and Law, Janis interviewed commons experts to identify and address the multidisciplinary barriers to creating a commons, applied commons principles to a policy scaffolding to support the practical deployment of a commons, and built a commons and ran a user study to test its effectiveness in supporting the co-creation of data protection solutions. In sum, her thesis demonstrates how a data protection-focused data commons can be an alternative socio-technical solution that supports data subject agency as part of the data protection process.

Janis is currently developing her research in ongoing work, including formalising methods that facilitate participatory data governance processes and support community-driven, co-created, collaborative data protection solutions and data stewardship in areas such as artificial intelligence, children’s rights, healthcare, real estate, and fundamental rights and freedoms.

- This article explores existing data protection law provisions in the EU and in six other jurisdictions from around the world, with a focus on Latin America, that apply to at least some forms of the data processing typically involved in an Artificial Intelligence (AI) system. In particular, the article analyzes how data protection law applies to “automated decision-making” (ADM), starting from the relevant provisions of the EU’s General Data Protection Regulation (GDPR). Rather than being a conceptual exploration of what constitutes ADM and how “AI systems” are defined by current legislative initiatives, the article proposes a targeted approach that focuses strictly on ADM and how data protection law already applies to it in real-life cases. First, the article shows how GDPR provisions have been enforced by courts and Data Protection Authorities (DPAs) in the EU in numerous cases where ADM is at the core of the facts considered. After showing that the safeguards in the GDPR already apply to ADM in real-life cases, even where ADM does not meet the high threshold of its specialized provision in Article 22 (“solely” ADM which results in “legal or similarly significant effects” on individuals), the article includes a brief comparative law analysis of six jurisdictions that have adopted general data protection laws (Brazil, Mexico, Argentina, Colombia, China and South Africa) and that are visibly inspired by provisions of the GDPR or its predecessor, Directive 95/46/EC, including those that are relevant for ADM. The ultimate goal of this study is to help researchers, policymakers and lawmakers understand how existing data protection law applies to ADM and profiling.